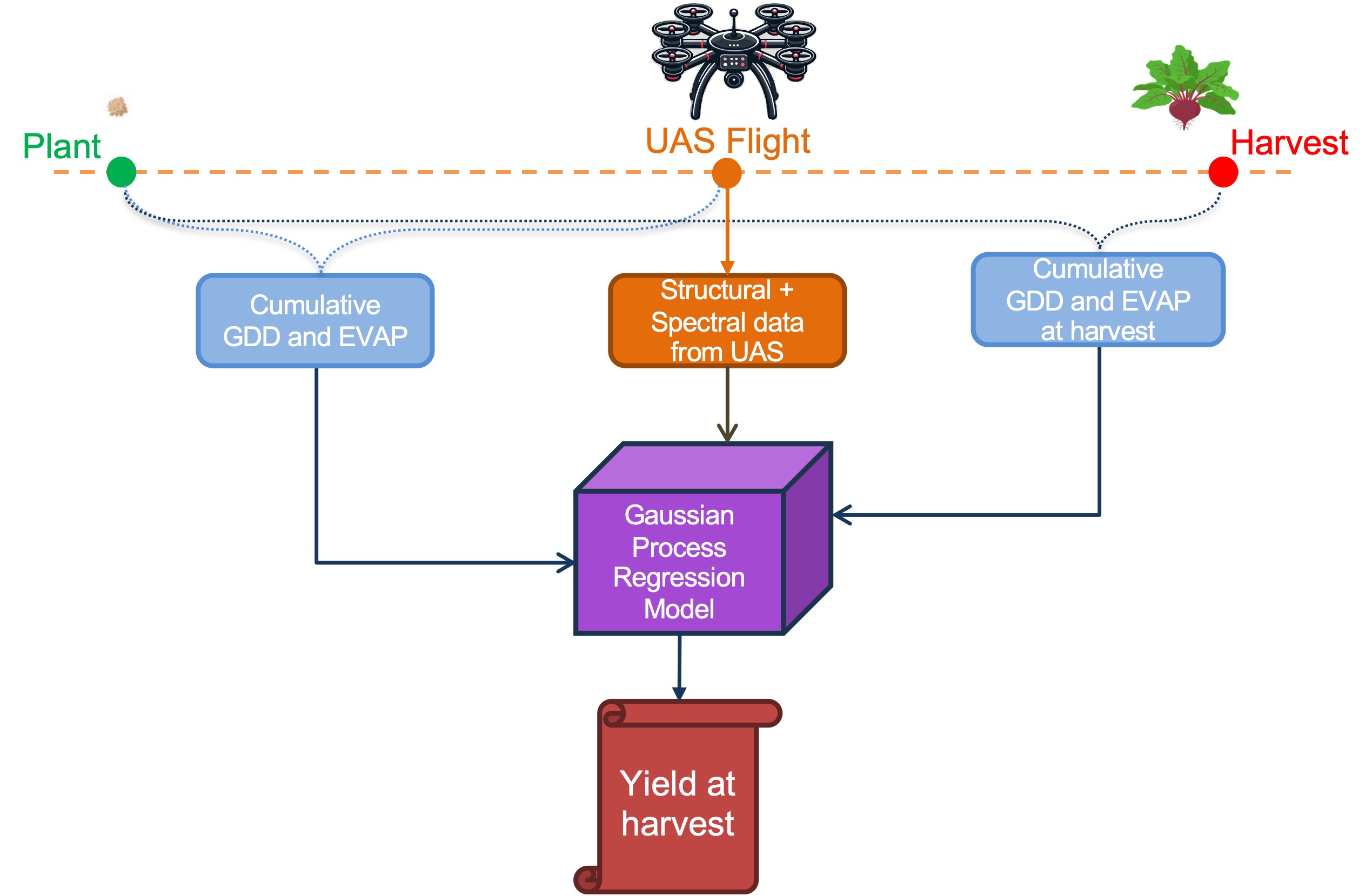

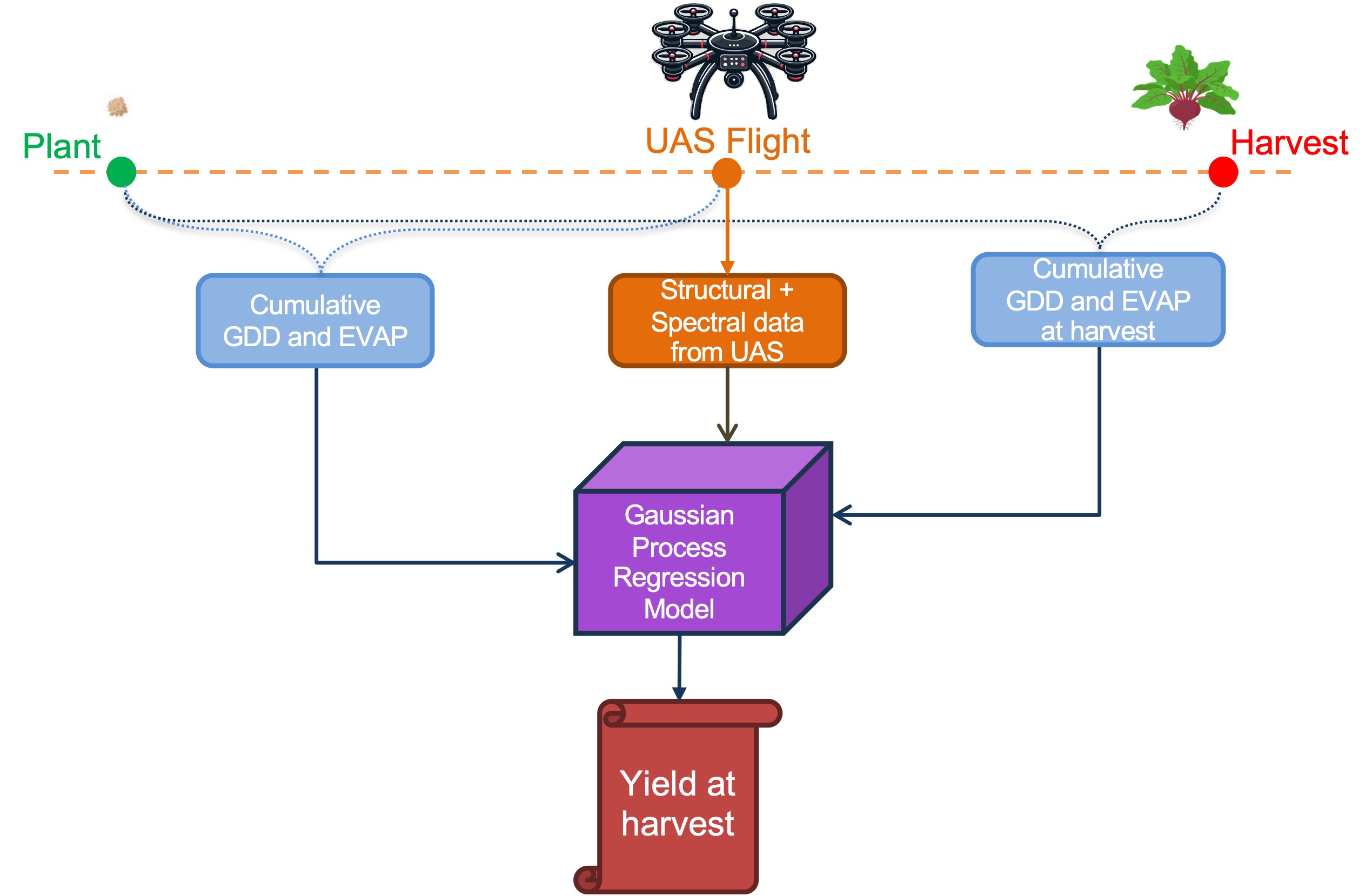

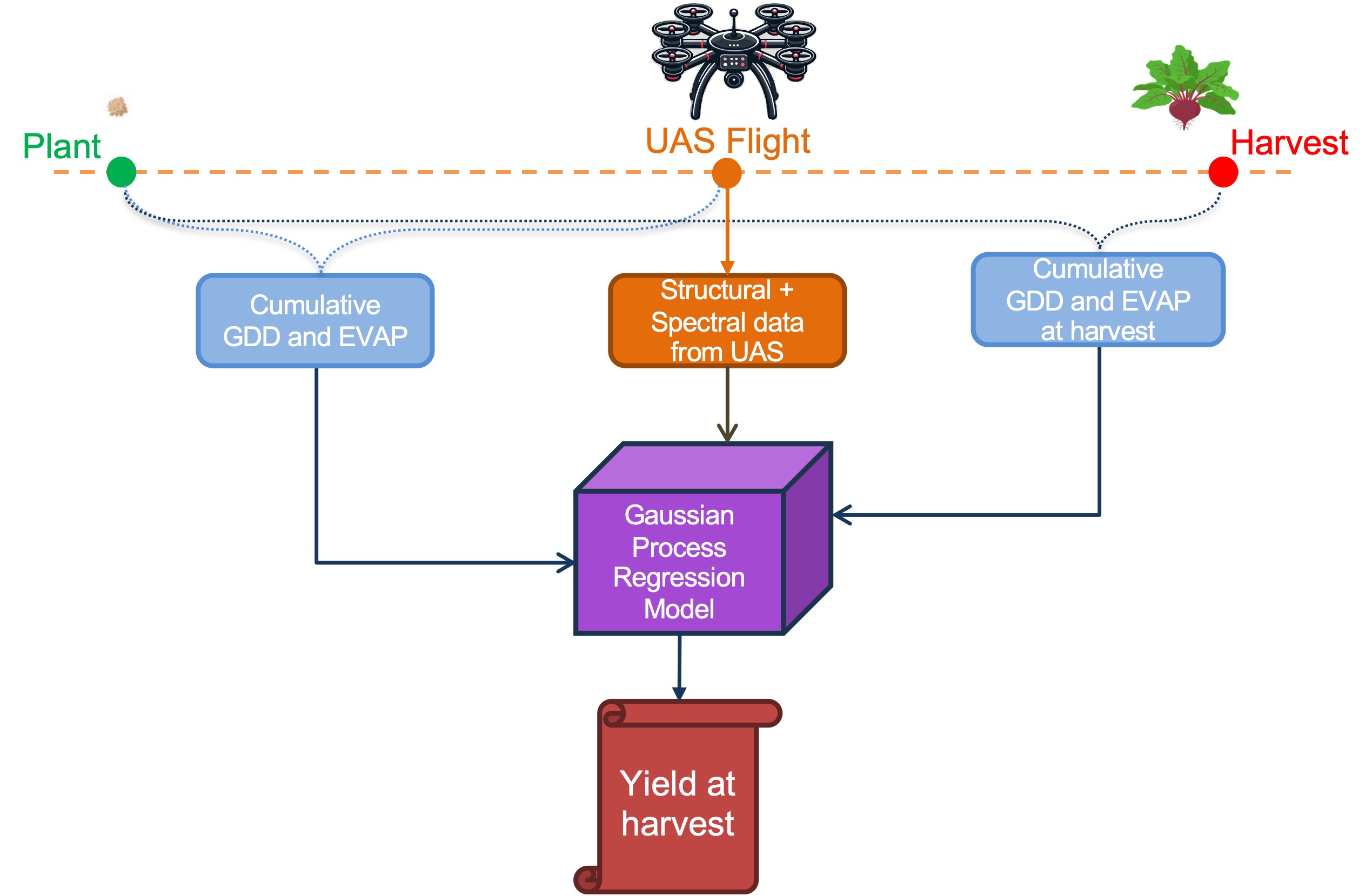

Unmanned Aerial Systems (UAS) have demonstrated substantial potential for enhancing agricultural monitoring, yet their application to root crops remains underexplored. Key challenges include developing models that are robust across growth stages and seasons, and evaluating the comparative utility of diverse imaging modalities. This study addresses these gaps by introducing a unified Gaussian Process Regression (GPR) model for predicting end-of-season table beet (*Beta vulgaris*) root yield using UAS-derived spectral and structural features, along with meteorological data. The model is designed to be resilient to variations in both flight scheduling and harvest timing.

Field trials were conducted at Cornell AgriTech, Geneva, NY, during the 2021 and 2022 growing seasons. Data acquisition included five-band multispectral imagery (475, 560, 668, 717, and 840 nm), hyperspectral imagery spanning 400–1000 nm, and light detection and ranging (LiDAR), captured at multiple phenological stages. Using only multispectral data, the unified model yielded a test R² of 0.81 and a test mean absolute percentage error (MAPE) of 15.7%, while the fusion of hyperspectral and LiDAR data achieved a test R² of 0.79 and a test MAPE of 17.4%. Model interpretation via SHAP (SHapley Additive exPlanations) analysis identified canopy volume as a primary contributor to yield prediction performance.
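
To make the modeling and evaluation terms above concrete, the following is a minimal sketch of Gaussian Process Regression scored with R² and MAPE on a held-out test split. It is not the study's implementation: the data are synthetic, the three feature columns (loosely standing in for canopy volume, a vegetation index, and a weather summary) are hypothetical, and the RBF kernel with fixed `length_scale` and `noise` values is an assumption chosen for illustration rather than a tuned or reported configuration.

```python
import numpy as np


def rbf_kernel(a, b, length_scale=1.0):
    """Squared-exponential (RBF) kernel between the rows of a and b."""
    sq_dist = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


def gpr_predict(x_train, y_train, x_test, noise=0.1, length_scale=3.0):
    """GPR posterior mean with fixed (untuned, assumed) hyperparameters."""
    y_mean = y_train.mean()
    k = rbf_kernel(x_train, x_train, length_scale) + noise * np.eye(len(x_train))
    chol = np.linalg.cholesky(k)
    # Solve (K + noise * I) alpha = y - mean via the Cholesky factor.
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train - y_mean))
    return rbf_kernel(x_test, x_train, length_scale) @ alpha + y_mean


def r2_score(y_true, y_pred):
    """Coefficient of determination."""
    ss_res = np.sum((y_true - y_pred) ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return 1.0 - ss_res / ss_tot


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    return 100.0 * np.mean(np.abs((y_true - y_pred) / y_true))


# Synthetic stand-in data (NOT the study's measurements).
rng = np.random.default_rng(0)
n = 200
features = rng.uniform(0.0, 1.0, size=(n, 3))
yield_obs = (
    20.0
    + 30.0 * features[:, 0]
    + 10.0 * features[:, 1]
    + 5.0 * features[:, 2]
    + rng.normal(0.0, 2.0, n)
)

# Hold out 25% of the samples as a test set.
order = rng.permutation(n)
train_idx, test_idx = order[:150], order[150:]
x_train, x_test = features[train_idx], features[test_idx]
y_train, y_test = yield_obs[train_idx], yield_obs[test_idx]

# Standardize features using training statistics only.
mu, sigma = x_train.mean(axis=0), x_train.std(axis=0)
x_train_s, x_test_s = (x_train - mu) / sigma, (x_test - mu) / sigma

y_pred = gpr_predict(x_train_s, y_train, x_test_s)
r2_test = r2_score(y_test, y_pred)
mape_test = mape(y_test, y_pred)
print(f"test R^2 = {r2_test:.2f}, test MAPE = {mape_test:.1f}%")
```

In practice the kernel hyperparameters would be fit to the data (for example, by maximizing the marginal likelihood) rather than fixed as they are here, and the numbers this sketch prints bear no relation to the results reported above.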

This work demonstrates that scalable, sensor-agnostic machine learning models can deliver accurate, generalizable yield estimates for subterranean crops. The accompanying presentation slides correspond to our AGU24 talk, which focused specifically on the performance of the multispectral-based modeling approach, whereas the paper provides the full analysis.