Unmanned Aerial Systems (UAS) have become essential tools in precision agriculture, offering flexible sensor integration, high-resolution data, and efficient field coverage. However, comparative evaluations of UAS imaging systems, particularly for root crop yield prediction and disease monitoring, remain limited. This study assesses the performance of multispectral (MSI), hyperspectral (HSI), and LiDAR sensing for estimating table beet (*Beta vulgaris*) root yield and *Cercospora* leaf spot (CLS) severity.

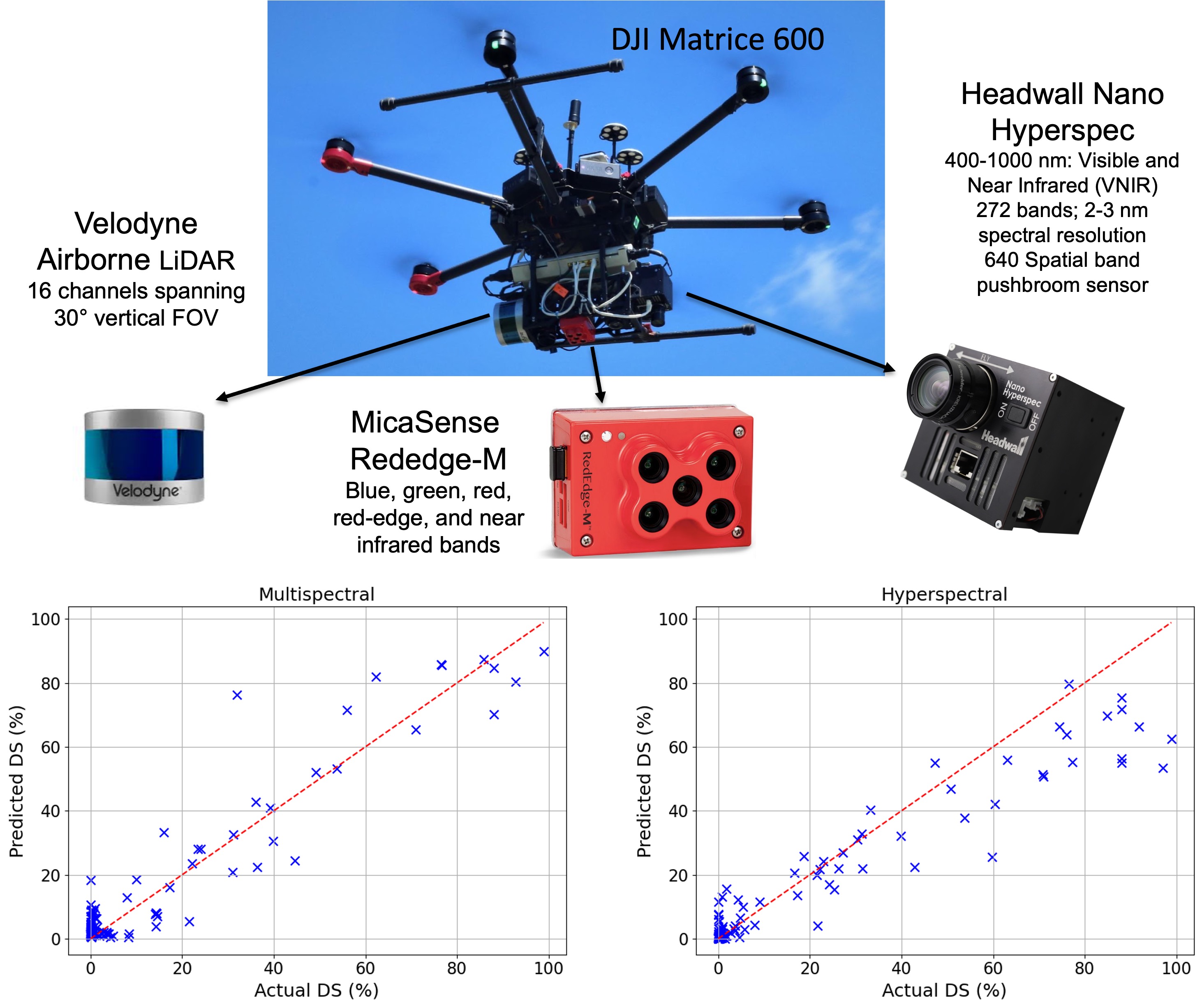

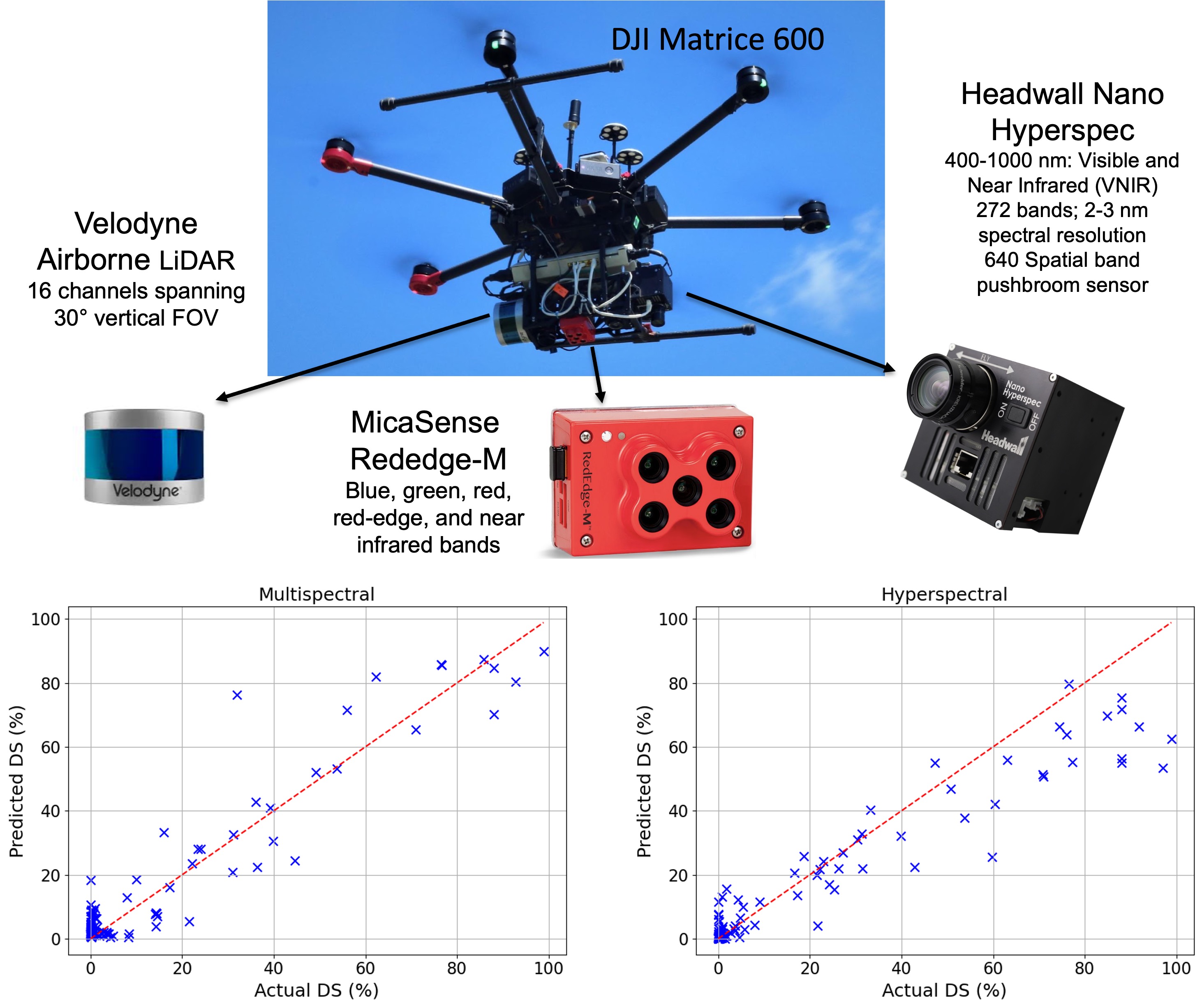

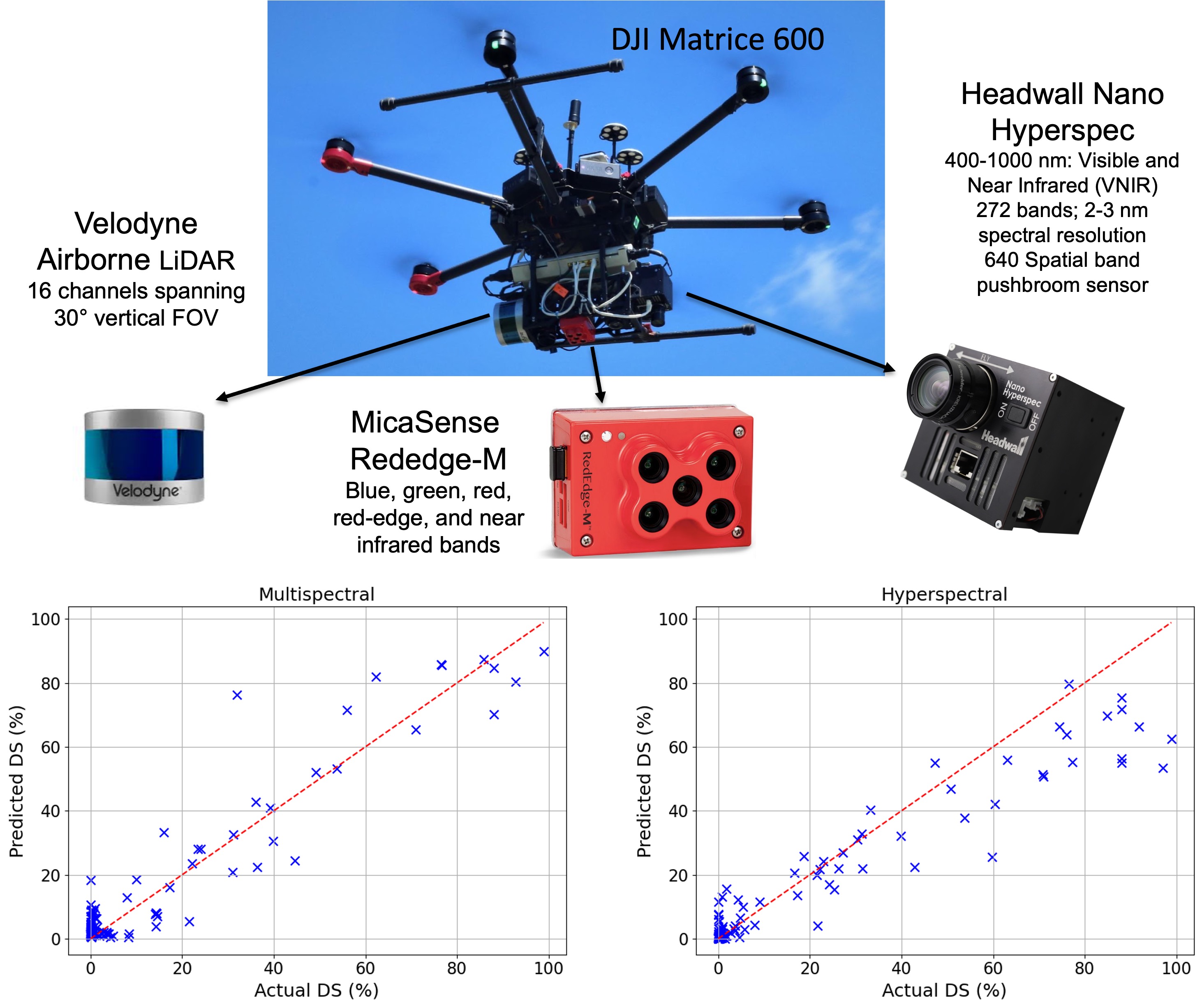

Field trials were conducted at Cornell AgriTech (Geneva, NY) during the 2021 and 2022 growing seasons. Data were collected with a five-band MSI sensor (MicaSense RedEdge-M), a visible and near-infrared (VNIR) HSI sensor (Headwall Nano, 272 bands), and a Velodyne VLP-16 LiDAR unit. Models were developed using both individual and fused sensor data. For root yield, the MSI-only model performed best (test R² = 0.82, test MAPE = 15.6%), while the HSI+LiDAR model reached a test R² of 0.79. CLS severity was modeled using vegetation indices and texture metrics, with MSI outperforming HSI (test R² = 0.90 vs. 0.87; test RMSE = 7.18% vs. 10.1%).
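The results above are reported as test-set R², MAPE, and RMSE. As a minimal sketch of how such scores are commonly computed, the Python functions below assume R² is the coefficient of determination (1 − SS_res/SS_tot) rather than a squared correlation coefficient, and that RMSE and MAPE follow their standard definitions. The function names and the sample yields are hypothetical and are not taken from the study. RMSE carries the units of the target, so for CLS severity (itself a percentage) it is expressed in percentage points.

```python
import math
from typing import Sequence


def _check(y_true: Sequence[float], y_pred: Sequence[float]) -> int:
    """Validate that observed and predicted series are non-empty and aligned."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    return len(y_true)


def r2_score(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    _check(y_true, y_pred)
    mean_obs = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_obs) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


def rmse(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Root-mean-square error, in the same units as the target."""
    n = _check(y_true, y_pred)
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)


def mape(y_true: Sequence[float], y_pred: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; observed values must be nonzero."""
    n = _check(y_true, y_pred)
    return 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n


# Hypothetical held-out plot yields (observed vs. predicted), for illustration only.
observed = [10.0, 20.0, 30.0, 40.0]
predicted = [12.0, 18.0, 33.0, 37.0]
print(f"R2={r2_score(observed, predicted):.3f}  "
      f"RMSE={rmse(observed, predicted):.3f}  "
      f"MAPE={mape(observed, predicted):.2f}%")
```

Because MAPE divides by each observed value, it is undefined when an observed yield is zero and is inflated by very small observed values, which is why it is usually read alongside R² rather than on its own.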

Additionally, structural metrics derived via Structure-from-Motion (SfM) proved more informative for yield estimation than those derived from LiDAR. These findings highlight the practicality and effectiveness of MSI for both tasks, supporting its use in cost-efficient, scalable precision-agriculture workflows.
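The abstract does not specify which SfM structural metrics were used. A common approach, assumed here purely for illustration, is to subtract a digital terrain model (DTM) from the SfM digital surface model (DSM) to obtain a canopy height model, then summarize it per plot. The sketch below uses hypothetical function names, an assumed 2 cm soil threshold, and toy elevation grids to compute canopy cover, mean and 90th-percentile height, and a canopy-volume proxy; these are illustrative features, not necessarily the ones used in the study.

```python
import math
from typing import Dict, List


def percentile(values: List[float], q: float) -> float:
    """Linear-interpolation percentile (q in 0-100) of a non-empty list."""
    ordered = sorted(values)
    pos = (len(ordered) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return ordered[lo] + (ordered[hi] - ordered[lo]) * (pos - lo)


def plot_structure_metrics(
    dsm: List[List[float]],
    dtm: List[List[float]],
    pixel_area_m2: float,
    min_height_m: float = 0.02,  # assumed soil/canopy threshold (hypothetical)
) -> Dict[str, float]:
    """Summarize one plot's canopy height model (CHM = DSM - DTM).

    dsm and dtm are co-registered elevation grids in metres, clipped to a plot.
    Pixels shorter than min_height_m are treated as bare soil.
    """
    heights = []
    for dsm_row, dtm_row in zip(dsm, dtm):
        for surface, ground in zip(dsm_row, dtm_row):
            # Negative heights (surface below terrain) are clipped to zero as noise.
            heights.append(max(surface - ground, 0.0))

    canopy = [h for h in heights if h >= min_height_m]
    if not canopy:
        return {"cover": 0.0, "mean_h": 0.0, "p90_h": 0.0, "volume_m3": 0.0}
    return {
        "cover": len(canopy) / len(heights),       # fraction of vegetated pixels
        "mean_h": sum(canopy) / len(canopy),       # mean canopy height (m)
        "p90_h": percentile(canopy, 90),           # 90th-percentile height (m)
        "volume_m3": sum(canopy) * pixel_area_m2,  # canopy volume proxy (m^3)
    }


# Toy 2x2 plot with a flat terrain model and 10 cm x 10 cm pixels.
metrics = plot_structure_metrics(
    dsm=[[0.30, 0.25], [0.00, 0.40]],
    dtm=[[0.0, 0.0], [0.0, 0.0]],
    pixel_area_m2=0.01,
)
print(metrics)
```

A percentile height is used here instead of the maximum because isolated DSM spikes from SfM matching errors can dominate a per-plot maximum.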

This work provides a comparative framework for sensor selection in UAS-based monitoring and contributes to advancing sustainable, data-driven crop management.